My sincere thanks to Vlad Zamfir for introducing the idea of by-block consensus and convincing me of its merits, along with many of the other core ideas of Casper, and to Vlad Zamfir and Greg Meredith for their continued work on the protocol.

In the previous entry of this series, we examined one of the two primary feature sets of Serenity: a heightened degree of abstraction that greatly increases the platform’s flexibility and moves Ethereum from “Bitcoin plus Turing-complete” toward “general-purpose decentralized computation.” Now, let us turn to the other flagship feature, which was the original motivation for the Serenity milestone: the Casper proof of stake algorithm.

Consensus By Bet

The key mechanism in Casper is a fundamentally new philosophy in the field of public economic consensus: consensus-by-bet. The core idea of consensus-by-bet is simple: the protocol gives validators opportunities to bet against the protocol on which blocks are going to be finalized. A bet on some block X in this context is a transaction which, by protocol rules, gives the validator a reward of Y coins (which are simply created out of thin air, hence “against the protocol”) in all realities in which block X was processed, but imposes a penalty of Z coins (which are destroyed) in all realities in which block X was not processed.

A validator will only want to make such a bet if they believe that block X is likely enough to be processed in the reality that users care about for the tradeoff to be worth it. And then comes the fun, economically recursive part: the reality that users care about, i.e., the state that users’ clients show when users want to check their account balance, the status of their contracts, and so on, is itself derived by looking at which blocks validators are betting on the most. Hence, each validator’s incentive is to bet according to how they expect others to bet in the future, which drives the process toward convergence.

A helpful analogy is proof of work consensus, a protocol which looks highly distinctive when viewed in isolation but can in fact be modeled as one very specific subset of consensus-by-bet. The reasoning is as follows. When you mine on a block, you spend E per second on electricity in exchange for a probability p per second of producing a block and receiving R coins in all forks that contain your block, while receiving nothing on all other chains.

Thus, every second, you make an expected profit of p*R-E on the chain you are mining on and lose E on all other chains; this can be interpreted as placing a bet at E:p*R-E odds that the chain you are mining on will “win”. For instance, if p is 1 in 1 million, R is 25 BTC ~= $10000 USD, and E is $0.007, then your gain per second on the winning chain is 0.000001 * 10000 - 0.007 = 0.003, your loss on the losing chain is the electricity cost of 0.007, and so you are betting at 7:3 odds (an implied probability of 70%) that the chain you are mining on will win. Note that proof of work satisfies the economic requirement of being “recursive” in the sense described above: users’ clients compute their balances by processing the chain with the most proof of work (i.e., bets) behind it.
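The arithmetic above can be reproduced in a few lines. This is a minimal sketch; the function name `pow_as_bet` is hypothetical, and it simply restates the break-even condition: a bet that wins `win` and loses `lose` is worth taking only if your probability q satisfies q * win >= (1 - q) * lose.

```python
def pow_as_bet(p: float, R: float, E: float):
    """Model one second of mining as a bet (hypothetical helper for illustration)."""
    win = p * R - E   # expected profit per second on the chain you mine on
    lose = E          # electricity burned, unrewarded on every other chain
    # Break-even when q * win == (1 - q) * lose, i.e. q = lose / (win + lose),
    # which simplifies to E / (p * R)
    implied_probability = lose / (win + lose)
    return win, lose, implied_probability


win, lose, q = pow_as_bet(p=1e-6, R=10_000, E=0.007)
print(f"odds {lose:.3f}:{win:.3f}, implied probability {q:.0%}")
```

Since the implied probability reduces to E / (p * R), a miner is effectively betting that the chain they are extending is at least that likely to win.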

Consensus-by-bet can be seen as a framework that encompasses this view of proof of work, and it can also be adapted to provide an economic game that incentivizes convergence in several other classes of consensus protocols. Traditional Byzantine-fault-tolerant consensus protocols, for instance, often include the idea of “pre-votes” and “pre-commits” before the final “commit” to a particular outcome; in a consensus-by-bet model, each stage can be treated as a bet, giving participants in later stages more assurance that participants in earlier stages are acting in good faith.

It can also be used to incentivize correct behavior in out-of-band human consensus, if needed, to overcome extreme situations such as a 51% attack. If someone acquires half the coins on a proof-of-stake network and attacks it, the community only needs to coordinate on a fork in which clients ignore the attacker’s chain, and the attacker and anyone who colluded with the attacker automatically lose all their coins. A more ambitious goal would be to make these forking decisions automatically through online nodes. If done successfully, this would bring into the consensus-by-bet framework the often overlooked but important result from traditional fault tolerance research that, under strong synchrony assumptions, the remaining honest nodes can still reach consensus even if nearly all nodes are trying to attack the system.

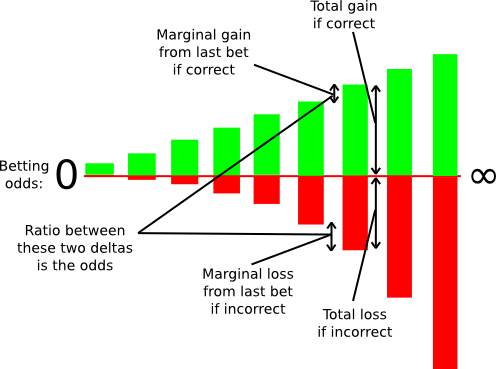

Within the framework of consensus-by-bet, different consensus protocols differ in only one respect: who is allowed to bet, at what odds, and how much? In proof of work, only one kind of bet is available: the option to bet on the chain containing one’s own block at odds E:p*R-E. In generalized consensus-by-bet, we can use a mechanism known as a scoring rule to effectively offer an infinite number of betting opportunities: one infinitesimally small bet at 1:1, one infinitesimally small bet at 1.000001:1, one infinitesimally small bet at 1.000002:1, and so on.

A scoring rule represented as an infinite number of wagers.

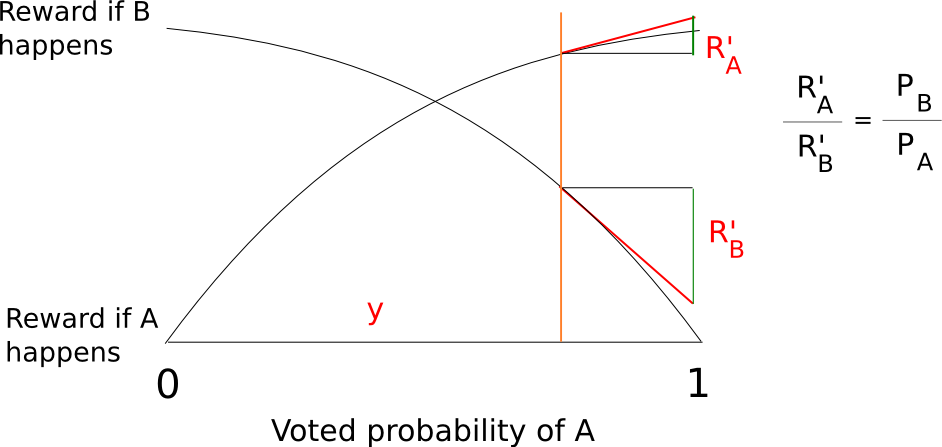

One can still decide exactly how large these infinitesimal marginal bets are at each probability level, but in general this technique lets the protocol elicit a very precise reading of the probability with which a given validator thinks a given block will be confirmed. If a validator believes that a block will be confirmed with probability 90%, they will accept all bets below 9:1 odds and reject all bets above 9:1 odds, and the protocol can infer this “opinion” that the chance of confirmation is 90% exactly. In fact, the revelation principle tells us that we may as well simply ask validators to provide a signed message containing their “opinion” on the probability that the block will be confirmed, and let the protocol calculate the bets on their behalf.

Thanks to the marvels of calculus, we are able to devise rather straightforward functions that calculate a total reward and penalty at each probability level, which are mathematically equivalent to aggregating an infinite collection of bets at all probability levels beneath the validator’s proclaimed confidence. A relatively simple illustration is s(p) = p/(1-p) and f(p) = (p/(1-p))^2/2 where s determines your reward if the event you are wagering on occurs, and f determines your penalty if it does not.
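The equivalence with an infinite collection of bets can be checked numerically. In the minimal sketch below (helper names are hypothetical), the ratio of marginal penalty to marginal reward at any probability p comes out to p/(1-p), which is exactly the odds of a fair infinitesimal bet at p. A brute-force search also suggests that a validator who believes q maximizes expected score by reporting q, which is what lets the protocol ask for an opinion directly.

```python
def s(p: float) -> float:
    # Reward if the block you bet on is finalized
    return p / (1 - p)


def f(p: float) -> float:
    # Penalty if it is not
    return (p / (1 - p)) ** 2 / 2


def marginal_odds(p: float, h: float = 1e-6) -> float:
    # Ratio of marginal penalty to marginal reward around p (central differences);
    # a fair infinitesimal bet at probability p has odds p : (1 - p)
    return (f(p + h) - f(p - h)) / (s(p + h) - s(p - h))


def expected_score(reported: float, believed: float) -> float:
    return believed * s(reported) - (1 - believed) * f(reported)


def best_report(believed: float, steps: int = 10_000) -> float:
    # Search a fine grid of reported probabilities for the highest expected score
    grid = [i / steps for i in range(1, steps)]
    return max(grid, key=lambda p: expected_score(p, believed))


print(marginal_odds(0.9))  # close to 0.9 / 0.1 = 9
print(best_report(0.9))    # honest reporting is optimal
```

The grid search is only an illustration; analytically, the derivative of the expected score vanishes exactly when p/(1-p) = q/(1-q).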

A major benefit of the generalized approach to consensus-by-bet is the following. In proof of work, the amount of “economic weight” behind a given block grows only linearly over time: if a block has six confirmations, reverting it costs miners (in equilibrium) roughly six times the block reward, and if a block has six hundred confirmations, reverting it costs six hundred times the block reward. In generalized consensus-by-bet, the economic weight that validators put behind a block can grow exponentially: if most other validators are willing to bet at 10:1, you might be comfortable betting at 20:1, and once nearly everyone bets 20:1, you might go for 40:1 or higher. Thus, a block may well reach a level of “de-facto complete finality”, where validators’ entire deposits are at stake backing that block, in as little as a few minutes, depending on how bold the validators are (and how much the protocol incentivizes them to be).
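To make the linear-versus-exponential contrast concrete, here is a toy model. It assumes the penalty rule f(p) = (p/(1-p))^2/2 given earlier, unscaled and measured in the same units as the deposit, and it assumes validators double their odds every round; both the doubling schedule and the helper names are hypothetical.

```python
def pow_revert_cost(confirmations: int, block_reward: float = 1.0) -> float:
    # Equilibrium cost of reverting a PoW block grows linearly with confirmations
    return confirmations * block_reward


def penalty_at_odds(r: float) -> float:
    # With odds r = p / (1 - p), the penalty f(p) = (p / (1 - p))^2 / 2 is r^2 / 2
    return r * r / 2


def rounds_until_deposit_at_risk(deposit: float, start_odds: float = 10.0):
    # Toy assumption: each round, validators double the odds of the previous round
    odds, rounds = start_odds, 0
    while penalty_at_odds(odds) < deposit:
        odds *= 2
        rounds += 1
    return rounds, odds


print(pow_revert_cost(600))
print(rounds_until_deposit_at_risk(1_000_000))
```

Each doubling of the odds quadruples the amount at risk, so a deposit of one million units is fully committed after only a handful of rounds, whereas the proof of work figure grows by one block reward per confirmation.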

Blocks, Chains and Consensus as Tug of War

Another distinctive aspect of how Casper operates is that instead of consensus being by-chain, as observed in current proof of work protocols, consensus occurs by-block: the consensus mechanism reaches a conclusion about the status of the block at each height independently from every other height. This method does introduce certain inefficiencies – notably, a bet must express the validator’s opinion on the block at every height rather than merely the head of the chain – but it turns out to be much simpler to implement strategies for consensus-by-bet in this framework, and it also has the added benefit of being much more accommodating to high blockchain velocity: theoretically, one can even achieve a block time that is quicker than network propagation with this model, since blocks can be created independently of each other, though with the clear stipulation that block finalization will still require some additional time.

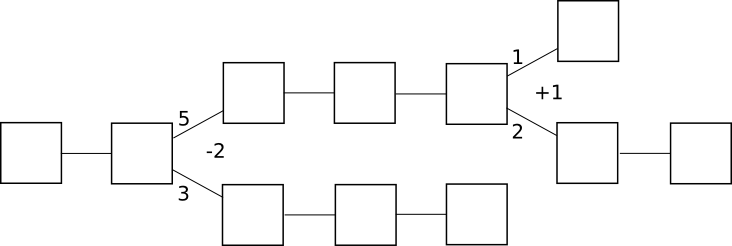

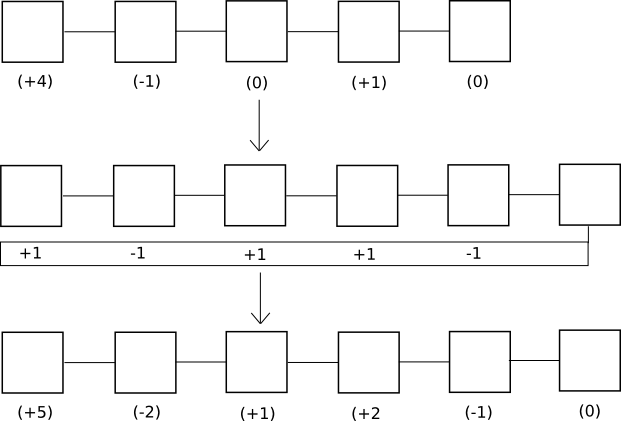

In by-chain consensus, one can perceive the consensus process as a form of tug-of-war between negative infinity and positive infinity at each fork, where the “status” at the fork signifies the number of blocks in the longest chain on the right side minus the number of blocks on the left side:

Clients trying to determine the “correct chain” simply walk forward from the genesis block, and at each fork go left if the status is negative and right if it is positive. The economic incentives here are also clear: once the status becomes positive, there is strong economic pressure for it to keep moving toward positive infinity, albeit very slowly; if the status becomes negative, there is equally strong pressure for it to move toward negative infinity.

As an aside, under this framework, the fundamental concept behind the GHOST scoring rule emerges as a natural generalization – rather than simply counting the length of the longest chain for the status, one should count every block on each side of the fork:

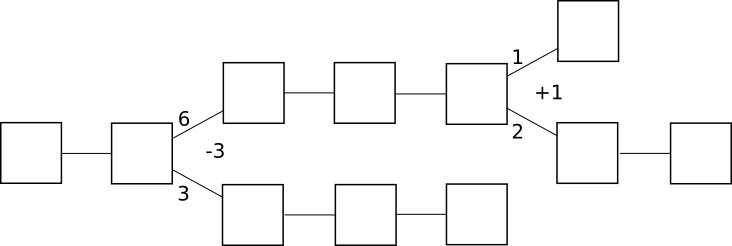
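The fork-choice walk and its GHOST generalization can be sketched as follows. This is a minimal illustration over a hypothetical block tree given as a child-to-parent map; the only difference between the two rules is the weight function used to compute the status at each fork.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Children = Dict[str, List[str]]


def build_children(parent_of: Dict[str, str]) -> Children:
    children: Children = defaultdict(list)
    for block, parent in parent_of.items():
        children[parent].append(block)
    for kids in children.values():
        kids.sort()  # deterministic order: the first child is the "left" branch
    return children


def longest_chain_weight(block: str, children: Children) -> int:
    # Number of blocks on the longest chain starting at (and including) `block`
    return 1 + max((longest_chain_weight(c, children) for c in children[block]), default=0)


def ghost_weight(block: str, children: Children) -> int:
    # GHOST-style weight: every block in the subtree rooted at `block`
    return 1 + sum(ghost_weight(c, children) for c in children[block])


def fork_choice(genesis: str, parent_of: Dict[str, str],
                weight: Callable[[str, Children], int]) -> str:
    children = build_children(parent_of)
    block = genesis
    while children[block]:
        # For a two-way fork this is "go right iff status = w(right) - w(left) > 0";
        # max() keeps the first (left) child on a tie
        block = max(children[block], key=lambda c: weight(c, children))
    return block


parent_of = {
    "A1": "G", "A2": "A1", "A3": "A2", "A4": "A3",              # left: one long chain
    "B1": "G", "B2": "B1", "C2": "B1", "D2": "B1", "B3": "B2",  # right: bushier subtree
}
print(fork_choice("G", parent_of, longest_chain_weight))  # A4
print(fork_choice("G", parent_of, ghost_weight))          # B3
```

In this example the left branch has the longest chain (4 blocks versus 3), but the right branch contains more blocks in total (5 versus 4), so the two rules pick different heads.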

In by-block consensus, the tug of war returns, but this time the “status” is simply an arbitrary number that can be increased or decreased by certain actions connected to the protocol; at every block height, clients process the block if the status is positive and do not process it if the status is negative. Note that although proof of work is currently by-chain, it doesn’t have to be: one can easily imagine a protocol where, instead of providing a parent block, a block with a valid proof of work solution must provide a +1 or -1 vote on every block height in its history; +1 votes would only be rewarded if the block that was voted on is processed, and -1 votes would only be rewarded if the block that was voted on does not get processed.

Naturally, in proof of work such a design would not function effectively for a straightforward reason: if you are required to vote on absolutely every previous height, then the volume of voting required will increase quadratically with time and rapidly grind the system to a halt. However, with consensus-by-bet, as the tug of war can converge to complete finality exponentially, the voting overhead becomes significantly more manageable.
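A minimal sketch of the by-block tug of war follows, with hypothetical helper names. Each height keeps its own independent status, and a client processes a height only when its status is positive; treating a status of exactly zero as "not processed" is an assumption. The last function counts the votes required by the hypothetical by-block proof of work, showing the quadratic growth.

```python
from typing import Dict, Iterable, List, Tuple


def apply_votes(status: Dict[int, int], votes: Iterable[Tuple[int, int]]) -> Dict[int, int]:
    # Each vote is (height, +1 or -1); every height has its own independent tug of war
    for height, vote in votes:
        status[height] = status.get(height, 0) + vote
    return status


def processed_heights(status: Dict[int, int], max_height: int) -> List[int]:
    # A client processes the block at a height only if that height's status is positive
    return [h for h in range(max_height + 1) if status.get(h, 0) > 0]


def total_pow_votes(num_blocks: int) -> int:
    # Block n votes on all n earlier heights, so the total is
    # 0 + 1 + ... + (num_blocks - 1), which grows quadratically
    return num_blocks * (num_blocks - 1) // 2


status = apply_votes({}, [(0, 1), (1, 1), (1, -1), (1, -1), (2, 1)])
print(processed_heights(status, 2))  # [0, 2]
print(total_pow_votes(1000))         # 499500
```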

One unexpected outcome of this mechanism is that a block can remain unconfirmed even while subsequent blocks are fully finalized. This may appear as a considerable efficiency loss; if there is one block whose status is fluctuating with ten blocks above it, each fluctuation would require recalculating state transitions for the entire chain of ten blocks. However, note that in a by-chain model, the exact same scenario can occur between chains as well, and the by-block version actually offers users more information: if their transaction was confirmed and finalized in block 20101, and they know that regardless of the contents of block 20100, that transaction will yield a specific result, then the result that they care about is finalized even if parts of the history preceding the result are not. By-chain consensus algorithms can never offer this attribute.
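The claim about partial finality can be illustrated with a toy account model. In this sketch (all names and balances are hypothetical), a client evaluates the finalized later block in both worlds, with the uncertain earlier block processed and with it skipped. If an account ends up with the same balance either way, the result that user cares about is already settled.

```python
from typing import Dict, List, Set, Tuple

Tx = Tuple[str, str, int]  # (sender, recipient, amount)


def apply_block(state: Dict[str, int], txs: List[Tx]) -> Dict[str, int]:
    new = dict(state)
    for sender, recipient, amount in txs:
        if new.get(sender, 0) >= amount:  # skip transfers the sender cannot afford
            new[sender] = new.get(sender, 0) - amount
            new[recipient] = new.get(recipient, 0) + amount
    return new


def possible_balances(state: Dict[str, int], uncertain_block: List[Tx],
                      later_block: List[Tx], account: str) -> Set[int]:
    # Evaluate the later block in both worlds: uncertain block processed, or not
    results = set()
    for include in (True, False):
        s = apply_block(state, uncertain_block) if include else dict(state)
        results.add(apply_block(s, later_block).get(account, 0))
    return results


state = {"alice": 50, "carol": 30}
block_20100 = [("alice", "bob", 20)]   # status still fluctuating
block_20101 = [("carol", "dave", 10)]  # finalized
print(possible_balances(state, block_20100, block_20101, "dave"))  # {10}
print(possible_balances(state, block_20100, block_20101, "bob"))   # {0, 20}
```

Dave's result is the same in both worlds, so it is effectively final; Bob's still depends on block 20100.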

So how does Casper work anyway?

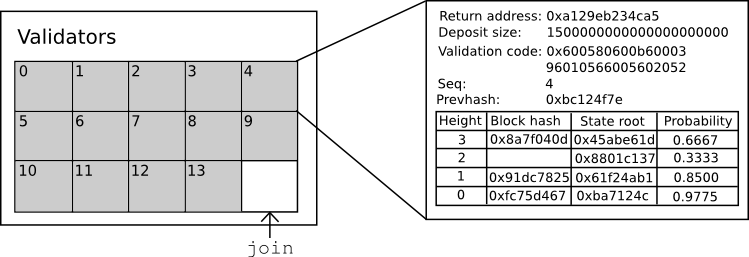

In any security-deposit-based proof of stake protocol, there is a current set of bonded validators, which is tracked as part of the state; to make a bet or take one of several other critical actions in the protocol, you must be in this set so that you can be penalized if you misbehave. Joining and leaving the set of bonded validators are both special transaction types, and critical actions in the protocol, such as bets, are also transactions; bets can be broadcast as independent objects through the network, but they can also be included in blocks.

In keeping with Serenity’s spirit of abstraction, all of this is implemented through a Casper contract, which has functions for making bets, joining, withdrawing, and querying consensus information, so users can submit bets and perform other actions simply by calling the Casper contract with the required data. The state of the Casper contract is organized as follows:

The contract monitors the current group of validators, and for each validator, it records six primary details:

- The return address for the validator’s deposit

- The existing amount of the validator’s deposit (note that the bets placed by the validator will alter this value)

- The validator’s validation code

- The sequence number of the latest bet

- The hash of the most recent bet

- The validator’s opinion table
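The per-validator record can be pictured as a small data structure. This is a sketch only: the field and class names are hypothetical and the contract’s actual storage layout may differ. Each opinion entry holds the state root, block hash and finalization probability described below for a single height.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# (hash, signature) -> 0 or 1, as described for validation code
ValidationCode = Callable[[bytes, bytes], int]


@dataclass
class OpinionEntry:
    state_root: bytes   # most likely state root at this height
    block_hash: bytes   # most likely block hash (zero if no block exists)
    probability: float  # believed probability that the block is finalized


@dataclass
class ValidatorRecord:
    return_address: bytes            # where the deposit is sent on withdrawal
    deposit: int                     # current deposit, adjusted as bets are scored
    validation_code: ValidationCode  # validator-chosen signature check
    seq: int = 0                     # sequence number of the latest bet
    prev_hash: bytes = bytes(32)     # hash of the latest bet
    opinions: Dict[int, OpinionEntry] = field(default_factory=dict)  # height -> opinion


v = ValidatorRecord(return_address=b"\x01" * 20, deposit=1000,
                    validation_code=lambda h, sig: 1)
v.opinions[20123] = OpinionEntry(b"\xbb" * 32, b"\xaa" * 32, 0.7)
```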

The “validation code” is another abstraction feature of Serenity: whereas other proof of stake systems require validators to use one specific signature verification algorithm, the Casper implementation in Serenity lets each validator specify a piece of code that accepts a hash and a signature and returns either 0 or 1; before accepting a bet, the contract runs the bet’s hash and signature through this code. The default validation code uses an ECDSA verifier, but one can also experiment with other verifiers: multisig, threshold signatures (potentially useful for creating decentralized stake pools!), Lamport signatures, and more.
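As an example of a non-ECDSA verifier, the sketch below builds a validation code from Lamport signatures using only SHA-256. The `(hash, signature) -> 0 or 1` shape follows the description above; everything else (function names, key format, the `accept_bet` wrapper) is a hypothetical illustration. Note that a Lamport key is one-time: signing two different hashes with the same key leaks secret material, so a real validator would need a fresh key per bet.

```python
import hashlib
import secrets


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def hash_bits(msg_hash: bytes):
    # The 256 bits of a 32-byte hash, most significant bit first
    return [(msg_hash[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def lamport_keygen():
    # Secret key: 256 pairs of random 32-byte preimages; public key: their hashes
    sk = [[secrets.token_bytes(32), secrets.token_bytes(32)] for _ in range(256)]
    pk = [[sha256(a), sha256(b)] for a, b in sk]
    return sk, pk


def lamport_sign(sk, msg_hash: bytes):
    # Reveal one preimage per bit of the hash (one-time use only)
    return [sk[i][bit] for i, bit in enumerate(hash_bits(msg_hash))]


def make_validation_code(pk):
    # Returns a function with the (hash, signature) -> 0/1 shape
    def validation_code(msg_hash: bytes, signature) -> int:
        if len(msg_hash) != 32 or len(signature) != 256:
            return 0
        for i, (preimage, bit) in enumerate(zip(signature, hash_bits(msg_hash))):
            if sha256(preimage) != pk[i][bit]:
                return 0
        return 1
    return validation_code


def accept_bet(validation_code, bet_hash: bytes, signature) -> bool:
    # The contract only cares whether the validator's own code returns 1
    return validation_code(bet_hash, signature) == 1


sk, pk = lamport_keygen()
code = make_validation_code(pk)
h = sha256(b"bet #1")
print(accept_bet(code, h, lamport_sign(sk, h)))  # True
```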

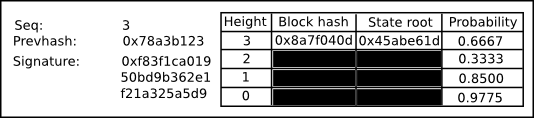

Every bet must include a sequence number one higher than that of the previous bet, and every bet must include the hash of the previous bet; hence, one can view the series of bets made by a validator as a kind of “private blockchain”, and from that perspective the validator’s opinion is essentially the state of that chain. An opinion specifies:

- What the validator perceives to be the most probable state root at any specified block height

- What the validator considers to be the most plausible block hash at any specified block height (or zero if no block hash exists)

- The likelihood that the block corresponding to that hash is finalized

A bet is an object whose essential fields are:

- The sequence number of the bet

- The hash of the previous bet

- A signature

- A list of modifications to the opinion
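The “private blockchain” of bets can be sketched as a hash-linked list. This is a minimal illustration under stated assumptions: the serialization in `digest()` is a toy encoding, not the real wire format; the modification tuple layout is hypothetical; and a new validator’s first bet is assumed to carry sequence number 1 and an all-zero previous hash.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical modification format: (height, block_hash, state_root, probability)
Modification = Tuple[int, bytes, bytes, float]


@dataclass(frozen=True)
class Bet:
    seq: int
    prev_hash: bytes
    signature: bytes
    modifications: Tuple[Modification, ...]

    def digest(self) -> bytes:
        # Toy serialization of the signed fields (signature excluded)
        h = hashlib.sha256()
        h.update(self.seq.to_bytes(8, "big"))
        h.update(self.prev_hash)
        for height, block_hash, state_root, prob in self.modifications:
            h.update(height.to_bytes(8, "big", signed=True))
            h.update(block_hash)
            h.update(state_root)
            h.update(repr(prob).encode())
        return h.digest()


def is_valid_bet_chain(bets: List[Bet]) -> bool:
    # Each bet must extend the previous one: seq + 1 and a matching prev_hash
    expected_seq, expected_prev = 1, bytes(32)
    for bet in bets:
        if bet.seq != expected_seq or bet.prev_hash != expected_prev:
            return False
        expected_seq, expected_prev = bet.seq + 1, bet.digest()
    return True


b1 = Bet(1, bytes(32), b"", ((20123, b"\xaa" * 32, b"\xbb" * 32, 0.7),))
b2 = Bet(2, b1.digest(), b"", ())
print(is_valid_bet_chain([b1, b2]))  # True
```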

The Casper contract function that processes a bet has three parts. First, it checks the sequence number, previous hash, and signature of the bet. Next, it updates the opinion table with any new information provided by the bet; a bet typically updates a few recent probabilities, block hashes, and state roots, so most of the table stays unchanged. Finally, it applies the scoring rule to the opinion: if the opinion says that you believe a given block has a 99% chance of finalization, and in the particular context in which this contract is running the block was finalized, you might receive 99 points; if the block was not finalized, you might lose 4900 points.
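The three steps can be sketched as a single function. This is a simplified model, not the contract’s code: class and field names are hypothetical, the bet’s hash is assumed to be precomputed, the `was_finalized` callback stands in for the contract’s knowledge of its own environment, and only the heights changed by the bet are scored. It uses the scoring rule s(p) = p/(1-p), f(p) = (p/(1-p))^2/2 from earlier, which reproduces the 99 and roughly 4900 point figures.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


def s(p: float) -> float:
    return p / (1 - p)             # reward if the block is finalized in this context


def f(p: float) -> float:
    return (p / (1 - p)) ** 2 / 2  # penalty if it is not


@dataclass
class Validator:
    deposit: float
    validation_code: Callable[[bytes, bytes], int]
    seq: int = 0
    prev_hash: bytes = bytes(32)
    opinions: Dict[int, float] = field(default_factory=dict)  # height -> probability


@dataclass
class Bet:
    seq: int
    prev_hash: bytes
    bet_hash: bytes                  # assumed precomputed hash of the bet's contents
    signature: bytes
    modifications: Dict[int, float]  # height -> new probability


def process_bet(v: Validator, bet: Bet, was_finalized: Callable[[int], bool]) -> float:
    # Step 1: check sequence number, previous hash and signature
    if bet.seq != v.seq + 1 or bet.prev_hash != v.prev_hash:
        raise ValueError("bad sequence number or previous hash")
    if v.validation_code(bet.bet_hash, bet.signature) != 1:
        raise ValueError("bad signature")
    v.seq, v.prev_hash = bet.seq, bet.bet_hash
    # Step 2: merge the (usually few) changed entries into the opinion table
    v.opinions.update(bet.modifications)
    # Step 3: score the changed opinions against this environment's history
    delta = sum(s(p) if was_finalized(h) else -f(p) for h, p in bet.modifications.items())
    v.deposit += delta
    return delta


v = Validator(deposit=10_000.0, validation_code=lambda h, sig: 1)
bet = Bet(1, bytes(32), b"\x01" * 32, b"", {20123: 0.99})
print(process_bet(v, bet, was_finalized=lambda height: True))  # about 99
```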

Note that, because this function runs inside the Casper contract as part of the state transition function, it has full knowledge of all previous blocks and state roots in the context of its own environment. Even if, from the point of view of the outside world, the validators proposing and voting on block 20125 have no way of knowing whether block 20123 will be finalized, when the validators come back to process that block they will know; alternatively, they could process both scenarios and later decide which one to follow. To prevent validators from submitting different bets to different environments, there is a simple slashing condition: if you make two bets with the same sequence number, or if you make a bet that the Casper contract cannot process, you lose your entire deposit.
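The two slashing conditions can be detected with a simple monitor. In this sketch the class and the `processable` flag are hypothetical: the flag stands in for whatever check determines that the Casper contract cannot process a bet. Seeing the same bet twice (for example, rebroadcast over the network) is not a violation; only two different bets with the same sequence number are.

```python
from typing import Dict, Set, Tuple


class SlashingMonitor:
    """Toy detector for the two slashing conditions."""

    def __init__(self) -> None:
        self.seen: Dict[Tuple[str, int], bytes] = {}  # (validator, seq) -> bet hash
        self.slashed: Set[str] = set()

    def observe(self, validator: str, seq: int, bet_hash: bytes, processable: bool) -> bool:
        # Condition 2: a bet the Casper contract cannot process
        if not processable:
            self.slashed.add(validator)
            return True
        # Condition 1: two different bets with the same sequence number
        previous = self.seen.setdefault((validator, seq), bet_hash)
        if previous != bet_hash:
            self.slashed.add(validator)
            return True
        return False


m = SlashingMonitor()
m.observe("alice", 7, b"A", True)         # first bet with seq 7
m.observe("alice", 7, b"A", True)         # same bet seen again: fine
print(m.observe("alice", 7, b"B", True))  # conflicting bet: True
```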

Exiting the validator pool involves two steps. First, one must submit a bet with a maximum height of -1; this effectively terminates the series of bets and initiates a four-month countdown timer (20 blocks / 100 seconds on the testnet) before the bettor can reclaim their funds by invoking a third method, withdraw. The withdrawal can be executed by anyone and sends funds back to the same address that initiated the original join transaction.
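The exit flow amounts to a small state machine. In this sketch the delay is measured in blocks and set to the 20-block testnet value; the names are hypothetical, and a live network would use a delay of roughly four months instead.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

WITHDRAWAL_DELAY_BLOCKS = 20  # testnet value; roughly four months on a live network


@dataclass
class Deposit:
    return_address: str
    amount: int
    exit_block: Optional[int] = None  # set when the terminating bet is processed
    withdrawn: bool = False


def process_exit_bet(d: Deposit, max_height: int, current_block: int) -> None:
    # Step 1: a bet with maximum height -1 ends the chain of bets and starts the timer
    if max_height == -1 and d.exit_block is None:
        d.exit_block = current_block


def withdraw(d: Deposit, current_block: int) -> Tuple[str, int]:
    # Step 2: anyone may call this; funds always go to the original return address
    if d.exit_block is None or d.withdrawn:
        raise ValueError("validator has not exited or has already withdrawn")
    if current_block < d.exit_block + WITHDRAWAL_DELAY_BLOCKS:
        raise ValueError("withdrawal delay has not elapsed")
    d.withdrawn = True
    return d.return_address, d.amount


d = Deposit("0xabc", 1000)
process_exit_bet(d, max_height=-1, current_block=100)
print(withdraw(d, current_block=120))  # ('0xabc', 1000)
```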

Block proposition

A block consists of (i) a number indicating the block height, (ii) the address of the proposer, (iii) a transaction root hash, and (iv) a signature. For a block to be valid, the proposer address must match the validator assigned to produce a block at that height, and the signature must verify against that validator’s own validation code. The time slot for producing a block at height N is T = G + N * 5, where G is the genesis timestamp; hence a block should normally appear every five seconds.
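The validity rule and slot timing can be sketched as follows. Assumptions: the block’s signing hash uses a toy encoding, and the proposer schedule is passed in as a function (the round-robin used in the example is purely illustrative; Casper uses the RNG described next).

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict

BLOCK_TIME = 5  # seconds, from T = G + N * 5


@dataclass
class Block:
    height: int
    proposer: str
    tx_root: bytes
    signature: bytes

    def signing_hash(self) -> bytes:
        # Toy encoding of the unsigned fields (not the real format)
        data = self.height.to_bytes(8, "big") + self.proposer.encode() + self.tx_root
        return hashlib.sha256(data).digest()


def slot_time(genesis_time: int, height: int) -> int:
    return genesis_time + height * BLOCK_TIME


def is_valid_block(block: Block, assigned_proposer: Callable[[int], str],
                   validation_codes: Dict[str, Callable[[bytes, bytes], int]]) -> bool:
    # The proposer must be the validator assigned to this height...
    if block.proposer != assigned_proposer(block.height):
        return False
    # ...and the signature must pass that validator's own validation code
    code = validation_codes.get(block.proposer)
    return code is not None and code(block.signing_hash(), block.signature) == 1


schedule = lambda h: ("alice", "bob")[h % 2]  # illustrative round-robin only
codes = {"alice": lambda h, sig: 1, "bob": lambda h, sig: 1}
print(is_valid_block(Block(2, "alice", bytes(32), b""), schedule, codes))  # True
print(slot_time(1_000_000, 3))                                            # 1000015
```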

An NXT-style random number generator is used to determine who can produce a block at each height; this essentially uses missed block proposals as a source of randomness. The rationale is that although this randomness can be manipulated, manipulation is costly: to influence it, one must give up the right to create a block and collect its transaction fees. If absolutely necessary, the cost of manipulation can be raised further by replacing the NXT-style RNG with a RANDAO-like protocol.

The Validator Approach

How does a validator operate under the Casper protocol? Validators perform two main kinds of actions: producing blocks and making bets. Block production is independent of everything else: validators collect transactions, and when their time slot to produce a block arrives, they create the block, sign it, and broadcast it to the network. Betting is more involved. The current default validator strategy in Casper is designed to mimic aspects of traditional Byzantine-fault-tolerant consensus: look at how other validators are betting, take the 33rd percentile, and move a step toward 0 or 1 from there.

To do this, each validator collects and tries to stay as up to date as possible on the bets being made by all other validators, and tracks the current opinion of each one. If there are few or no opinions from other validators about a particular block height, it follows an initial algorithm that looks roughly like this:

- If the block is absent, but the present time is still quite close to when the block should have been published, wager 0.5

- If the block is not present, but a significant amount of time has elapsed since it should have been published, wager 0.3

- If the block is present and it arrived punctually, wager 0.7

- If the block is available, but it arrived either far too early or excessively late, wager 0.3

Some randomness is added to help avoid “stuck” situations, but the basic principle remains the same.
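The initial rules can be written as a small function. The text does not specify what counts as “close to” or “far from” the expected time, nor how much randomness is added, so `tolerance` and `jitter` below are hypothetical parameters chosen only for illustration.

```python
import random
from typing import Optional


def initial_bet(expected_time: float, now: float, arrival_time: Optional[float],
                tolerance: float = 10.0, jitter: float = 0.05,
                rng: Optional[random.Random] = None) -> float:
    # tolerance and jitter are hypothetical values, not taken from Casper
    if arrival_time is None:
        # Block absent: 0.5 while still near its slot, 0.3 once it is clearly late
        base = 0.5 if now - expected_time <= tolerance else 0.3
    else:
        # Block present: 0.7 if it arrived on time, 0.3 if far too early or late
        base = 0.7 if abs(arrival_time - expected_time) <= tolerance else 0.3
    if rng is not None:
        # Small random perturbation to help break "stuck" situations
        base += rng.uniform(-jitter, jitter)
    return base


print(initial_bet(expected_time=100, now=105, arrival_time=None))  # 0.5
print(initial_bet(expected_time=100, now=200, arrival_time=101))   # 0.7
```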

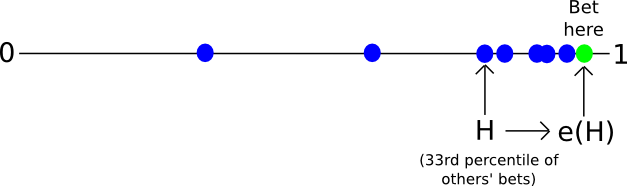

If there are already many opinions on a specific block height from other validators, we employ the following strategy:

- Let L be the value such that two-thirds of validators are betting above L. Let M denote the median (that is, the value such that half of validators are betting above M). Let H be the value at which two-thirds of validators are betting below H.

- Let e(x) represent a function that makes x more “extreme”, that is, pushes the value away from 0.5 and toward 0 or 1, whichever side it is on. A straightforward example is the piecewise function e(x) = 0.5 + x / 2 if x > 0.5 else x / 2.

- If L > 0.8, wager e(L)

- If H < 0.2, wager e(H)

- Otherwise, wager e(M), but clamp the result to the range [0.15, 0.85] so that a group of fewer than 67% of validators cannot force another validator to move its bets too far
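The strategy above can be sketched directly. The e(x), thresholds and clamp come from the text; how L and H are read off a finite list of bets (floor and ceiling index conventions below) is an assumption, as are the function names.

```python
import math
import statistics
from typing import List


def e(x: float) -> float:
    # Push x away from 0.5: toward 1 if x > 0.5, toward 0 otherwise
    return 0.5 + x / 2 if x > 0.5 else x / 2


def next_bet(others: List[float]) -> float:
    b = sorted(others)
    n = len(b)
    low = b[math.floor((n - 1) / 3)]      # L: about two-thirds of bets are above it
    high = b[math.ceil(2 * (n - 1) / 3)]  # H: about two-thirds of bets are below it
    mid = statistics.median(b)            # M
    if low > 0.8:
        return e(low)
    if high < 0.2:
        return e(high)
    return min(max(e(mid), 0.15), 0.85)   # clamp to [0.15, 0.85]


print(next_bet([0.9] * 9))  # supermajority above 0.8: move further toward 1
print(next_bet([0.2, 0.5, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95, 0.99]))  # clamped to 0.85
```

In the second example the median is 0.8, so e(M) = 0.9, but L = 0.7 is not above 0.8, so the bet is held at 0.85 rather than following the majority all the way.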

Validators are free to choose their own level of risk aversion within this strategy by choosing the shape of e. A function where e(x) = 0.99999 for x > 0.8 could work (and would probably give behavior similar to Tendermint), but it is somewhat riskier and lets hostile validators making up a large portion of the bonded validator set trick these validators into losing their entire deposit at low cost (the attack would be to bet 0.9, trick the other validators into betting 0.99999, and then switch to betting 0.1 to force the system to converge to zero). On the other hand, a function that converges very slowly is less efficient when the system is not under attack, since finality arrives later and validators must keep betting on each height for longer.

So, how does a client ascertain what the current state is? Essentially, the process unfolds as follows. It begins by downloading all blocks and all bets. Then, it utilizes the same algorithm as previously mentioned to build its own view, but does not publish it. Instead, it sequentially examines each height, processing a block if its probability exceeds 0.5 and bypassing it otherwise; the state after executing all of these blocks is regarded as the “current state” of the blockchain. The client can also establish a subjective understanding of “finality”: when the opinion at every height up to a certain k is either above 99.999% or below 0.001%, then the client deems the first k blocks finalized.
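A client’s view can be sketched given the per-height probabilities it computed locally (assumed here to be the output of running the betting strategy without publishing). Heights above 0.5 are processed to form the current state, and k counts the leading heights whose probability is above 99.999% or below 0.001%. Treating a missing opinion as unprocessed and non-final is an assumption.

```python
from typing import Dict, List, Tuple

FINAL_HIGH = 0.99999  # above this, a height is final "in"
FINAL_LOW = 0.00001   # below this, a height is final "out"


def client_view(opinions: Dict[int, float], max_height: int) -> Tuple[List[int], int]:
    # Heights whose blocks the client processes to build the "current state"
    processed = [h for h in range(max_height + 1) if opinions.get(h, 0.0) > 0.5]
    # k = number of leading heights whose opinion is already final either way
    k = 0
    for h in range(max_height + 1):
        p = opinions.get(h)
        if p is None or not (p > FINAL_HIGH or p < FINAL_LOW):
            break
        k += 1
    return processed, k


opinions = {0: 0.999999, 1: 0.000001, 2: 0.9, 3: 0.999999}
print(client_view(opinions, 4))  # ([0, 2, 3], 2)
```

Here height 3 is already near-certain, but it does not count toward k because height 2 is still undecided.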

Additional Research

There remains considerable research to conduct regarding Casper and generalized consensus-by-bet. Specific areas include:

- Proving results showing that the system economically incentivizes convergence, even in the presence of some Byzantine validators

- Identifying optimal validator strategies

- Ensuring that the mechanism for incorporating the bets in blocks is not vulnerable to exploitation

- Enhancing efficiency. Currently, the POC1 simulation accommodates approximately 16 validators operating concurrently (an increase from around 13 a week prior), although ideally, we should aim to maximize this number (note that the number of validators the system can support on a live network should be roughly the square of the POC performance, as the POC runs all nodes on a single machine).

The forthcoming article in this series will focus on initiatives to introduce scalability scaffolding into Serenity and is expected to be released around the same time as POC2.